Overview

Gesture is a Virtual Reality experience that introduces British Sign Language (BSL) through immersive interactions and dynamic conversations. Designed to bridge communication gaps, this project offers an engaging way to learn BSL by placing players in interactive scenarios where they can practice signing in a natural and intuitive environment.

Tools used

Figma

Status

In progress

Content

Context & Research

Recent research from RNID has highlighted a significant lack of public awareness of how to communicate effectively with deaf people who use British Sign Language (BSL). This gap in knowledge contributes significantly to the daily challenges this community faces. According to some statistics:

Competitors

Current solutions for learning BSL rely mainly on conventional methods, such as structured courses run by institutions. Online resources, on the other hand, consist mainly of video lessons. Because BSL is a visual and physical language, it can be difficult to learn from static images or videos alone.

Problem

Problem 1

Complex navigation.

Users struggle to find key information quickly, especially during high-pressure events.

Problem 2

Poor visual hierarchy.

Dense text and technical data make it difficult to scan and prioritize key insights.

Problem 3

Lack of a personalized dashboard for user-specific insights.

Users can't tailor information to their regions, hazard types, or operational priorities.

Problem 4

Weak mobile experience.

Many users rely on mobile devices in the field, but the current platform isn't optimized for them.

Problem 5

Underused advanced features.

Advanced features such as APIs, exposure estimates, and analysis tools are difficult to access or understand due to poor onboarding.

Ideation

This phase focused on creating a well-structured information architecture, refining user flows, and organizing features into coherent clusters to enhance usability and clarity.

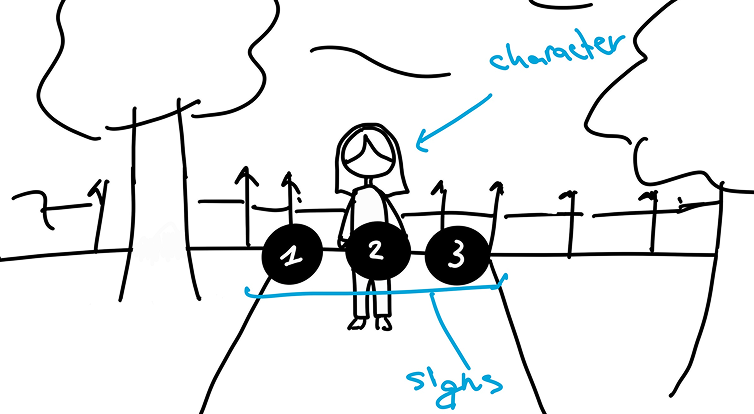

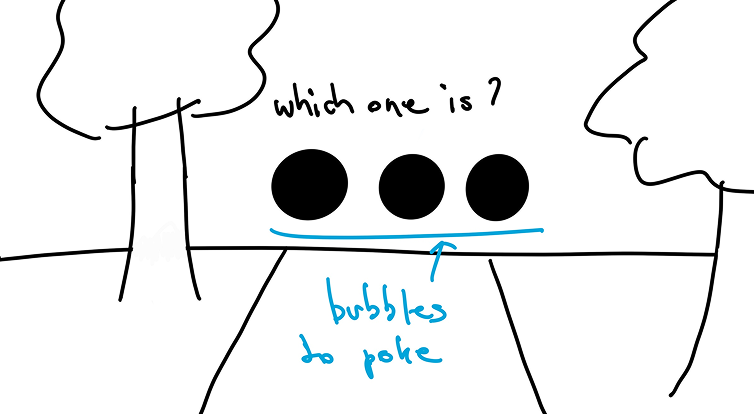

Wireframes

Solution

Settings

The game environments are designed to feel familiar, so that players feel comfortable and can relate them to everyday situations.

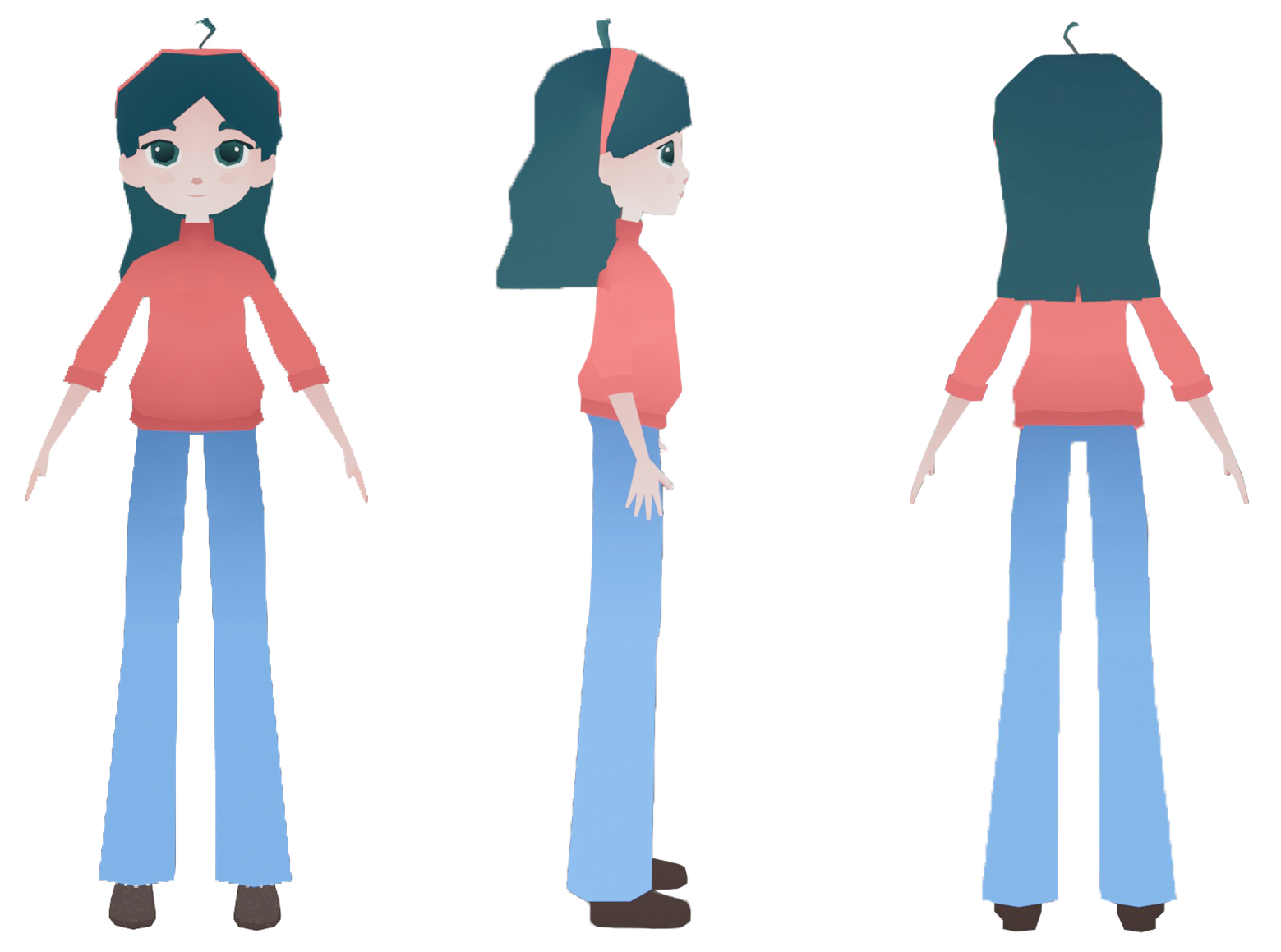

Character

The main character is a girl who acts as the player's conversation partner. She has a friendly, familiar look.

Her design is deliberately simple, which allows for future customisation across different settings.

Development

Development used Unity as the game engine and the Meta Quest 3S as the VR headset. Using the Meta Interaction SDK, I developed a custom gesture recognition system that detects the user's signs.
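The write-up does not describe how the recognition works internally. As a minimal, hypothetical sketch of one common approach, the Python below matches per-finger curl values (0 = straight, 1 = fully curled) against stored hand-shape templates and only reports a sign once the pose has been held for several consecutive frames, which helps filter out accidental matches. All names (`SignTemplate`, `recognise`, `HoldDetector`), the curl representation and the thresholds are assumptions for illustration; this is not the Meta Interaction SDK's API and not necessarily how Gesture implements it. In Unity the same logic would be written in C# and fed by the SDK's hand-tracking data.

```python
from dataclasses import dataclass

FINGERS = ("thumb", "index", "middle", "ring", "little")


@dataclass
class SignTemplate:
    """Hypothetical static hand-shape template: target curl per finger (0 = straight, 1 = curled)."""
    name: str
    curls: dict
    tolerance: float = 0.2


def deviation(template: SignTemplate, observed: dict) -> float:
    """Total absolute difference between observed and template curls."""
    return sum(abs(observed[f] - template.curls[f]) for f in FINGERS)


def matches(template: SignTemplate, observed: dict) -> bool:
    """True if every finger's observed curl lies within the template's tolerance."""
    return all(abs(observed[f] - template.curls[f]) <= template.tolerance for f in FINGERS)


def recognise(templates, observed):
    """Return the name of the closest matching template, or None if nothing matches."""
    candidates = [t for t in templates if matches(t, observed)]
    if not candidates:
        return None
    return min(candidates, key=lambda t: deviation(t, observed)).name


class HoldDetector:
    """Reports a sign once, after it has been recognised for `frames_required` consecutive frames."""

    def __init__(self, frames_required: int = 10):
        self.frames_required = frames_required
        self._current = None
        self._count = 0

    def update(self, sign):
        if sign is not None and sign == self._current:
            self._count += 1
        else:
            # A different sign (or no sign) resets the streak.
            self._current = sign
            self._count = 1 if sign is not None else 0
        if self._current is not None and self._count == self.frames_required:
            return self._current
        return None
```

In use, `recognise` would run on every tracked frame and its result would be passed to `HoldDetector.update`; the detector returns the sign name only on the frame where the hold threshold is first reached, so a single held pose triggers one interaction rather than one per frame.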

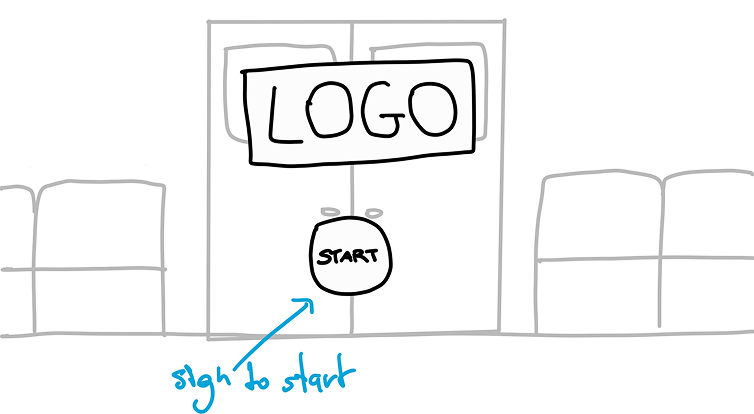

Start

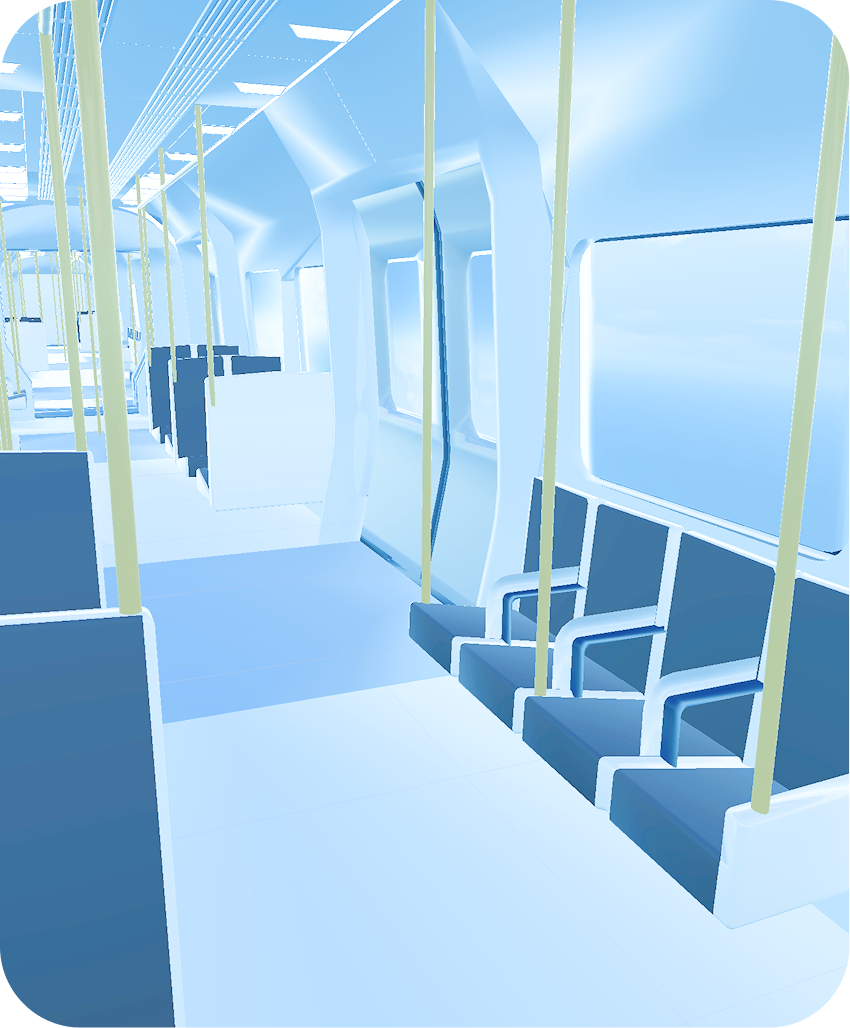

The game's lobby recalls the environment of the Tube (the London Underground).

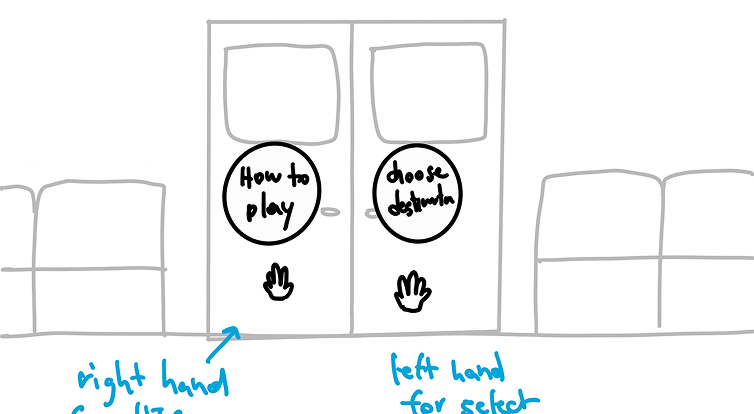

Select lesson

Players can use the map to navigate between levels.

Follow the signs

Each lesson simulates a conversation with the character, and the player follows the suggested signs in order to respond.
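To make the lesson mechanic concrete, here is a minimal, hypothetical sketch of a scripted conversation that only advances when the player performs the suggested sign. The class names (`DialogueStep`, `Lesson`), the method `on_sign_recognised` and the example sign labels are assumptions for illustration, not taken from the project; the recognised sign is assumed to arrive from a gesture recogniser such as the one described under Development.

```python
from dataclasses import dataclass


@dataclass
class DialogueStep:
    character_line: str  # what the character signs to the player (hypothetical content)
    expected_sign: str   # the sign the player is prompted to perform in reply


class Lesson:
    """Steps through a scripted conversation, one accepted sign at a time."""

    def __init__(self, steps):
        self.steps = list(steps)
        self.index = 0

    @property
    def finished(self) -> bool:
        return self.index >= len(self.steps)

    def current_prompt(self):
        """The sign currently suggested to the player, or None once the lesson is over."""
        return None if self.finished else self.steps[self.index].expected_sign

    def on_sign_recognised(self, sign: str) -> bool:
        """Advance if the recognised sign is the suggested one; return whether it was accepted."""
        if self.finished or sign != self.steps[self.index].expected_sign:
            return False
        self.index += 1
        return True
```

Keeping the script as plain data means new conversations can be authored without touching the recognition code; when `finished` becomes true, the game could hand over to the end-of-lesson quiz.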

Test yourself

At the end of each lesson, players can test what they have learned through a quiz.